What is Colocation?

Colocation (a.k.a. “colo”) is a service model in which organizations rent space (racks, cabinets, private cages or an entire data hall) within a third-party data center and install their own servers, storage and networking gear. The provider delivers the facility infrastructure (power, cooling, physical security and carrier-neutral network connectivity), while the tenant retains full control over its hardware and software stack.

Colocation data centers emerged in the late 1990s and early 2000s as a solution for businesses that wanted to offload the capital‐intensive burden of building and operating their own server farms. At that time, enterprises faced rising demand for reliable Internet connectivity and 24×7 uptime, but building a fully redundant power and cooling infrastructure was prohibitively expensive.

Colocation providers stepped in to lease rack space, power feeds, and network access in their facilities, allowing customers to install their own hardware while benefiting from economies of scale in utilities, security, and connectivity.

Early adopters were predominantly ISPs and dot-com startups, but by the mid-2000s, larger enterprises recognized colo as a way to achieve higher availability SLAs without tying up capital, leading to an explosion of carrier-neutral “meet-me rooms” and interconnection points in key metro markets.

Over the past two decades, the colocation business has evolved far beyond simple space‐and‐power rentals. Providers have layered on managed services—remote hands, hardware monitoring, even full‐stack server management—transforming colo from a “bring-your-own-kit” model into a flexible, on-demand infrastructure supply.

The rise of cloud and hybrid-cloud architectures drove further innovation, as data centers became strategic connectivity hubs offering direct on-ramps to AWS, Azure, and Google Cloud. More recently, burgeoning demand for AI workloads and edge computing has spawned micro-colos at 5G aggregation sites and modular container deployments, bringing ultra-low latency compute closer to users.

Today’s colocation landscape is a dynamic ecosystem that blends traditional rack rentals with DCaaS offerings, sustainability commitments, and programmable interconnection fabrics—reflecting how the industry has continually adapted to meet the needs of an increasingly digital and distributed world.

Market Outlook for Colocation

Pure “bring-your-own-servers, rent-a-rack” colocation remains a large and growing market—but it’s not the only game in town any more. Here’s what’s happening:

- Colocation itself is still expanding

- The global ‘pure colocation’ revenue market was worth about $69.4 billion in 2024 and is forecast to hit $165.5 billion by 2030 at a 16 percent CAGR (Grand View Research). These figures cover only pure colocation revenues, i.e. the rental of rack, cage, power, cooling and basic interconnect services. They typically exclude managed services, value-added edge or DCaaS bundles.

- The broader colocation market, which covers ‘infrastructure-as-a-service’ colocation plus DCaaS, managed hosting, edge-computing nodes and tightly integrated connectivity, was valued at US$130.2 billion in 2024 and is forecast to grow at a 14.65% CAGR through 2034, reaching US$569.6 billion (GlobeNewswire).

- But higher-value, on-demand models are growing even faster

- Data Center as a Service (DCaaS), where you rent fully managed compute, storage, networking, power and cooling as a utility, jumped from $105.5 billion in 2024 to $127.4 billion in 2025, and is projected to exceed $694 billion by 2034 at a 20.7 percent CAGR (Precedence Research).

- Many colocation operators now offer “rack plus everything else” bundles (managed hands, DCIM, security/compliance packages, and cloud-interconnect credits), blurring the line between pure rack rentals and DCaaS (Technavio).

- Hyperscalers and keen enterprises bypass pure colo altogether

- Companies such as Google, AWS and Meta more often build dedicated hyperscale campuses or lease turn-key DCaaS capacity rather than simply renting cabinets in a multi-tenant hall (The Wall Street Journal).

- However, when entering new geographic regions, hyperscalers frequently leverage colocation providers as an initial stepping-stone—rapidly deploying availability zones and establishing presence while demand grows. This transitional approach enables them to scale workloads and evaluate the market before committing capital to designing and constructing their own purpose-built data centers.

- There is a clear shift away from customers wanting only raw space + power + cooling. Instead, most new contracts bundle managed services, consumption-based billing, and cloud/edge integration.

- If you’re planning a data center strategy, expect pure colo to remain a foundational layer—but design for higher-level “as-a-service” add-ons (DCaaS, managed colo, direct cloud/edge interconnect) that many customers now demand.
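The growth rates quoted in the market figures above can be sanity-checked with the standard compound annual growth rate (CAGR) formula. The sketch below is illustrative only: the function names are hypothetical, and published forecasts may use different base years or rounding, so small mismatches against a headline CAGR are expected.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate as a fraction (0.16 means 16%)."""
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: int) -> float:
    """Value reached after compounding `rate` for `years` periods."""
    return start_value * (1 + rate) ** years


# Market sizes quoted in the text, in US$ billions.
pure_colo = cagr(69.4, 165.5, 2030 - 2024)
dcaas = cagr(127.4, 694.0, 2034 - 2025)

print(f"Pure colocation, 2024 to 2030: {pure_colo:.1%}")
print(f"DCaaS, 2025 to 2034: {dcaas:.1%}")
```

Running the same check on any forecast you are handed is a quick way to confirm that the start value, end value and stated CAGR are mutually consistent.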

How Colocation Works

At its core, colocation brings together the four essential pillars of a modern data-center facility—space & power, cooling & environment, connectivity & interconnection, and operational services—so that organizations can deploy and manage their own IT equipment without having to build or run the underlying infrastructure themselves.

Tenants simply lease cabinet or cage space with metered, redundant power feeds and let the provider’s UPS, generators and on-site fuel reserves ensure continuous energy delivery.

Meanwhile, precision cooling systems—from raised-floor air distribution to liquid-cooled racks—maintain optimal temperatures and humidity levels, complete with hot- and cold-aisle containment for efficiency and reliability.

Carrier-neutral “meet-me” rooms let customers plug into multiple networks, cloud on-ramps and exchange points for low-latency connectivity, and a suite of operational services—remote hands, cross-connect provisioning, SLAs and real-time monitoring—rounds out the offering, delivering a turnkey environment that keeps your servers running securely and smoothly around the clock.

The Four Core Pillars of a Colocation Data Center

Space & Power: Leased cabinets with redundant power feeds

Cooling & Environment: Precision cooling and hot/cold-aisle containment

Connectivity & Interconnection: Carrier-neutral cross-connect and IX access

Operational Services: Remote hands, cabling, and SLA-backed monitoring

- Space & Power

- Tenants lease standard cabinets (42–48U), half-racks, full-racks, larger cages, or even customized Open Compute Project (OCP) racks designed for high-density and hyperscale deployments. Each space comes with metered, redundant power feeds and dedicated PDUs, accommodating a diverse range of IT requirements.

- Providers maintain UPS systems, backup generators and on-site fuel to guarantee continuous energy delivery.

- Cooling & Environment

- Environmentally controlled rooms use CRAC/CRAH units, raised-floor air distribution or liquid cooling for high-density racks.

- Humidity, airflow and hot-aisle/cold-aisle containment are monitored to OEM specifications or clients’ specific requirements.

- Connectivity & Interconnection

- Carrier-neutral “meet-me rooms” let tenants cross-connect to multiple carriers, ISPs, cloud on-ramps and partners.

- Many sites host Internet exchange points (IXPs), reducing latency and improving throughput for distributed applications.

- Operational Services

- Remote Hands & Eyes: Day-to-day tasks (racking, cabling, troubleshooting) performed by on-site engineers.

- Cross-Connects & Cabling: Physical linking of tenant systems to carriers, clouds and other customers.

- SLAs & Monitoring: Tiered service levels for power, network availability and response times.
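When comparing the tiered service levels mentioned above, it helps to translate an availability percentage into the downtime it actually permits. The following is a minimal sketch; the three percentages are common illustrative examples, not figures taken from any particular provider's SLA.

```python
HOURS_PER_YEAR = 365 * 24  # 8,760 hours; leap years are ignored


def allowed_downtime_minutes(availability_pct: float) -> float:
    """Maximum yearly downtime, in minutes, permitted by an availability SLA."""
    return (1 - availability_pct / 100) * HOURS_PER_YEAR * 60


# Illustrative SLA levels (assumed for this example).
for pct in (99.9, 99.99, 99.999):
    print(f"{pct}% availability allows {allowed_downtime_minutes(pct):.1f} min/year")
```

Each additional "nine" cuts the permitted downtime by a factor of ten, which is why the gap between SLA tiers matters more than the percentages suggest.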

Business Benefits of Colocation

Colocation data centers deliver a turnkey platform that combines world-class infrastructure with operational expertise, enabling organizations to achieve high availability, rapid growth, and strict security—all while keeping costs aligned to actual usage.

By leasing space and power in a carrier-neutral facility, customers tap into multi-tiered redundancy for uninterrupted uptime, elastic scalability to add racks on demand, and a consumption-based OpEx model that avoids heavy CapEx outlays.

Robust physical and network security measures—ranging from biometric access controls and CCTV to enterprise-grade firewalls and fire suppression—safeguard critical assets, while on-site engineering and service-level agreements free IT teams to focus on innovation rather than facility upkeep.

Moreover, integrated ecosystems and software-defined “on-ramps” to hyperscale clouds, plus emerging edge-ready micro-facilities at 5G sites, ensure seamless hybrid-cloud and ultra-low-latency deployments for today’s most demanding applications.

- Reliability & Uptime: Multi-tiered redundancy (power, cooling, network) ensures industry-leading availability.

- Scalability: Rapid capacity expansion—just add racks or commit more space—without capital-intensive builds.

- Cost Efficiency: OpEx model avoids large upfront CapEx; billing is consumption-based (space, power, bandwidth).

- Physical & Network Security: Biometric access, CCTV, fire suppression, and enterprise-grade firewalls.

- Focus on Core Business: Offload facility management to experts and free up IT staff for revenue-generating projects.

- Ecosystem & Interconnection: Proximity to cloud providers, partners and carrier hubs accelerates hybrid-cloud deployments.

Beyond the classic benefits, modern colocation now includes:

- Digital Ecosystems & On-Ramps: Instant connectivity to hyperscale clouds and SaaS via software-defined interconnection platforms.

- Edge-Ready Deployments: Micro-facilities co-located at 5G base stations offering ultra-low latency for IoT/AR/VR—cementing colo’s role in edge computing.

Energy Performance and Responsibilities

When you split responsibility for a facility’s “guts” (power, cooling, building) from its “brains” (servers, storage, network), achieving holistic energy and sustainability targets does become more complex—but the industry has developed both governance models and tooling to bridge that divide.

The Shared-Responsibility Challenge

In a typical colo deployment:

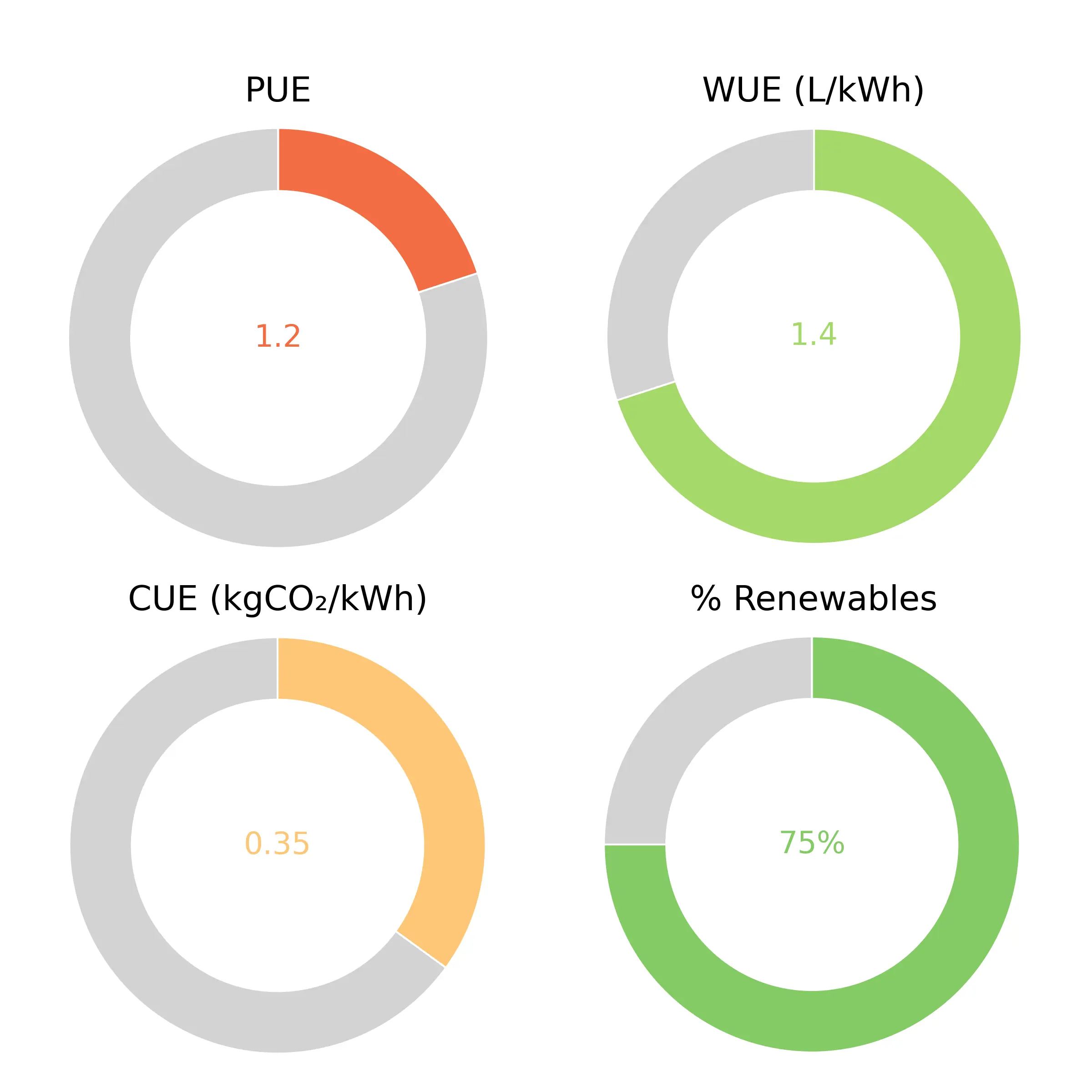

- Provider owns and optimizes the mechanical plant (CRAC units, chillers, generators, UPS) and thus controls the facility’s PUE (Power Usage Effectiveness), WUE (Water Usage Effectiveness), and base CUE (Carbon Usage Effectiveness).

- Tenant installs and manages IT equipment, which can vastly differ in power density, utilization, and life-cycle efficiency. That IT footprint directly influences the IT-side of PUE and CUE but sits outside the provider’s direct control.

Taken together, the composite PUE/CUE/WUE you actually achieve depends on both parties’ actions, making end-to-end performance harder to guarantee.
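All three metrics share the same denominator, the energy consumed by IT equipment, which is why the tenant's side of the split matters as much as the provider's. The sketch below computes them from annual totals using the commonly cited definitions (facility energy, water, and energy-related emissions, each divided by IT energy). The class name and the sample figures are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class AnnualTotals:
    facility_kwh: float   # all energy entering the site (IT + cooling + losses)
    it_kwh: float         # energy delivered to tenant IT equipment
    water_litres: float   # site water consumption
    co2e_kg: float        # emissions from the energy the site consumed


def pue(t: AnnualTotals) -> float:
    """Power Usage Effectiveness: dimensionless, 1.0 is the theoretical floor."""
    return t.facility_kwh / t.it_kwh


def wue(t: AnnualTotals) -> float:
    """Water Usage Effectiveness in litres per IT kWh."""
    return t.water_litres / t.it_kwh


def cue(t: AnnualTotals) -> float:
    """Carbon Usage Effectiveness in kg CO2e per IT kWh."""
    return t.co2e_kg / t.it_kwh


# Made-up annual figures for a single hall.
site = AnnualTotals(
    facility_kwh=13_000_000,
    it_kwh=10_000_000,
    water_litres=18_000_000,
    co2e_kg=3_900_000,
)
print(f"PUE {pue(site):.2f}, WUE {wue(site):.2f} L/kWh, CUE {cue(site):.2f} kgCO2e/kWh")
```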

Governance & Transparency via SLAs and Dashboards

To manage this split accountability, leading colo providers now embed sustainability into their contracts and customer portals:

- Energy-efficiency SLAs: 74 % of providers report that customers expect “contractually binding efficiency and sustainability commitments,” with explicit PUE targets or carbon caps in lease agreements.

- Sub-metering & reporting: Tenants can opt into per-rack or per-cage submeters, feeding both parties’ dashboards. This lets an enterprise see exactly how much M&E (mechanical & electrical) overhead versus IT load they’re consuming.

- Sustainability Dashboards: The sample sustainability dashboard presents real-time PUE, WUE, CUE and percentage-renewables metrics for each deployment—displayed as intuitive gauges that immediately flag where efficiencies or risks lie. By surfacing energy usage, water consumption, carbon intensity and on-site renewable coverage in one pane, it enables both providers and tenants to jointly monitor performance and report against Scope 1, Scope 2 and partial Scope 3 targets.

Another example of a sustainability dashboard could show per-deployment electricity use, carbon emissions (location- and market-based), and renewable coverage—adding to the joint monitoring and reporting against scopes 1, 2, and partial scope 3.
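As a rough illustration of what sub-metered reporting makes possible, the sketch below splits facility overhead across tenants in proportion to their metered IT energy. The pro-rata rule, the tenant names and the numbers are assumptions made for this example; actual allocation methods are defined by each provider's contract and metering setup.

```python
def allocate_overhead(
    tenant_it_kwh: dict[str, float], facility_pue: float
) -> dict[str, dict[str, float]]:
    """Attribute M&E overhead to each tenant pro rata to its metered IT energy.

    With PUE = facility energy / IT energy, the overhead per IT kWh is (PUE - 1).
    """
    report = {}
    for tenant, it_kwh in tenant_it_kwh.items():
        overhead_kwh = it_kwh * (facility_pue - 1)
        report[tenant] = {
            "it_kwh": it_kwh,
            "overhead_kwh": overhead_kwh,
            "total_kwh": it_kwh + overhead_kwh,
        }
    return report


# Hypothetical monthly sub-meter readings for two cages.
readings = {"cage-a": 40_000.0, "cage-b": 10_000.0}
for tenant, row in allocate_overhead(readings, facility_pue=1.5).items():
    print(tenant, row)
```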

What the Market Is Demanding

Enterprises—particularly in finance, retail, and government—are under growing regulatory and procurement pressure to:

- Hit science-based targets (e.g., net-zero by 2030).

- Certify via third-party standards (ISO 50001, ISO 14001, LEED, BREEAM).

- Report consistently through CDP, TCFD, or regional frameworks like the EU’s Corporate Sustainability Reporting Directive (CSRD).

- Procure renewables via Power Purchase Agreements (PPAs) or green tariffs.

Because tenants can’t guarantee the facility’s carbon mix by themselves, they now insist on colo providers offering either on-site renewables, PPAs, or “green attestations” as part of their lease.

Emerging Solutions & Service-Layer Innovations

Providers have responded with a growing suite of sustainability-focused capabilities:

- Renewable Energy Coverage: Bundled PPAs, virtual PPA offerings, or direct solar/wind on-site. Equinix, Digital Realty and others now let customers “lock in” 100 % renewables for their footprint (Business Insider).

- AI-Driven Optimization: Digital Realty’s Apollo AI tool runs real-time analytics on both facility and tenant loads to tweak cooling setpoints, battery dispatch, and even site-level microgrid operations—helping hit 60 % emissions-reduction targets by 2030 (Business Insider).

- Managed IT & Orchestration Layers: Beyond pure rack space, operators now offer “full-stack” rack units where they manage servers and virtualization on behalf of the customer—bringing the entire PUE/CUE/WUE picture back under one roof.

- Circular-Economy Programs: Equipment “take-back,” e-waste recycling, and refurbished hardware offerings help tenants reduce embodied carbon in their IT fleets.

Bottom Line:

While pure colo naturally divides accountability, the industry’s trajectory is clear: jointly managed SLAs, transparent dashboards, and integrated sustainability services are becoming table stakes. Enterprises still get the control they want over their compute stack, but both sides now share data, targets, and even tools—ensuring that end-to-end energy, water, and carbon metrics can be controlled, audited, and optimized as one unified outcome.

Cutting Edge Colocation

To keep pace with an evolving colocation market, leading providers are redefining what it means to colocate. From earth-friendly construction and AI-driven operations to edge-centric micro-facilities and sovereign enclaves, these innovations aren’t mere bells and whistles: they address the pressing challenges of sustainability, latency, security, and regulatory complexity facing modern enterprises.

Here is how the data-center industry is deploying green concrete and microgrids, embedding machine learning into every facet of facility management, and knitting together hybrid-cloud fabrics—unlocking new levels of efficiency, resilience, and performance for mission-critical workloads.

Sustainability and Green Construction

Meta’s Rosemount, Minnesota data center is leading the way in AI-optimized green concrete, using Meta’s open-source BoTorch and Ax frameworks to run multi-objective Bayesian optimizations across thousands of mix variations and identify low-carbon formulations that maintain structural integrity while slashing embodied CO₂ emissions by up to 30% compared to conventional concrete.

Parallel academic work has demonstrated the power of conditional variational autoencoders (CVAEs) to generate custom concrete recipes from limited lifecycle-impact datasets, accelerating the transition from lab-scale models to full-scale pours in weeks instead of months—critical for hyperscale and edge deployments where speed and sustainability both matter (Engineering at Meta, arXiv).

Alongside greener construction materials, on-site renewables and modular microgrids are reshaping colocation’s energy footprint. Operators are now pairing solar PV arrays, fuel cells or biogas generators with battery storage and smart controls to offset up to 80% of Scope 2 emissions while bolstering resilience during grid disruptions.

At the same time, industry frameworks—such as the Uptime Institute’s “Guide to Environmental Sustainability Metrics for Data Centers” and evolving GHG Protocol methods like 24/7 carbon matching—give providers and tenants the transparency needed to track Scope 2 and Scope 3 emissions in real time and certify “near-zero carbon” operations by 2030.
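To see why hourly (24/7) matching is stricter than annual matching, consider the sketch below. It scores each hour separately, so surplus carbon-free energy in one hour cannot cover a shortfall in another. This is one simple way to express the idea, with hypothetical data; the precise accounting rules depend on the reporting framework in use.

```python
def hourly_match_score(load_kwh: list[float], carbon_free_kwh: list[float]) -> float:
    """Fraction of load covered by carbon-free energy in the same hour."""
    if len(load_kwh) != len(carbon_free_kwh):
        raise ValueError("both series must cover the same hours")
    total_load = sum(load_kwh)
    if total_load == 0:
        raise ValueError("load must be non-zero")
    # Cap each hour at the load so surplus cannot carry over to other hours.
    matched = sum(min(load, cfe) for load, cfe in zip(load_kwh, carbon_free_kwh))
    return matched / total_load


# Four illustrative hours: flat load, variable carbon-free supply.
load = [100.0, 100.0, 100.0, 100.0]
supply = [0.0, 150.0, 200.0, 50.0]

annual_style = min(sum(supply) / sum(load), 1.0)  # volume-only matching
print(f"Volume matched: {annual_style:.1%}")
print(f"Hourly matched: {hourly_match_score(load, supply):.1%}")
```

In this example the supply equals the load in total volume, yet only part of the load is matched hour by hour.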

When integrated with AI-powered energy management platforms that actively adjust cooling setpoints and optimize power distribution, these advancements enable colocation facilities to achieve ambitious PUE, WUE, and CUE objectives while providing clients with measurable sustainability outcomes.

Edge-Ready Colocation and 5G Integration

Edge-ready colocation brings compute and storage literally to the network’s doorstep, embedding micro-data centers within or adjacent to 5G radio access nodes so that IoT sensors, AR/VR headsets, and real-time analytic engines can communicate with processing infrastructure over single-digit-millisecond links.
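The physics behind those latency targets is easy to estimate. Light travels through optical fibre at roughly two thirds of its speed in a vacuum, or about 200 km per millisecond, so a round-trip budget caps how far away the compute can sit. The sketch below is a back-of-the-envelope calculation; the processing allowance is a hypothetical parameter, and real paths add switching, queuing and radio-access delays.

```python
FIBRE_KM_PER_MS = 200.0  # approximate one-way propagation speed in glass fibre


def max_fibre_distance_km(rtt_budget_ms: float, processing_ms: float = 0.0) -> float:
    """Longest one-way fibre run that fits a round-trip latency budget."""
    propagation_ms = rtt_budget_ms - processing_ms
    if propagation_ms <= 0:
        return 0.0
    # Half of the remaining budget is available for each direction.
    return propagation_ms / 2 * FIBRE_KM_PER_MS


# Example: 5 ms round-trip budget with an assumed 3 ms spent on processing.
print(max_fibre_distance_km(5.0, processing_ms=3.0), "km")
```

Once processing time is accounted for, the usable radius shrinks quickly, which is the practical argument for placing micro-facilities near the radio access network.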

Analysts report at least 1,500 pilot edge data center deployments worldwide as of 2023, underscoring the rapid maturation of this distributed model (GlobeNewswire). The global edge data center market, valued at roughly US$6 billion in 2023 and projected to grow to US$17 billion by 2032 at a 12.5 % CAGR, reflects both hyperscale and colo operators racing to build micro-facilities that meet 5G’s stringent latency and bandwidth demands (DataIntelo).

Real-world telco–colocation partnerships highlight how edge integrations are unlocking new service models. In the United States, Dish Wireless has deployed on-premise edge data centers in key markets to host Open RAN functions and MEC workloads, interconnecting them via a dedicated high-speed backbone to ensure sub-10 ms performance for applications like live video analytics and smart-factory controls (Cisco).

These collaborations exemplify how colocation providers and carriers are co-developing edge-optimized offerings that deliver ultra-low latency, localized resilience, and simplified integration into broader hybrid-cloud and multi-access edge computing (MEC) ecosystems.

AI-Driven Operations and Predictive Analytics

Data centers today generate vast streams of telemetry—from rack‐level power draw and inlet/outlet temperatures to airflow velocities and equipment vibration. AI‐driven DCIM platforms ingest this continuous data flow, applying machine‐learning models and anomaly‐detection algorithms to spot subtle deviations long before they trigger failures.
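As a simplified stand-in for the kind of anomaly detection described above, the sketch below flags readings that deviate sharply from a rolling window of recent values (a rolling z-score). It is not any vendor's algorithm; the window size, threshold and sample temperatures are assumptions chosen for illustration, and production DCIM platforms typically use richer models.

```python
from collections import deque
from statistics import mean, stdev


def find_anomalies(readings: list[float], window: int = 12, threshold: float = 3.0) -> list[int]:
    """Return indices of readings more than `threshold` sigmas from the rolling mean."""
    history: deque[float] = deque(maxlen=window)
    anomalies = []
    for i, value in enumerate(readings):
        if len(history) == window:  # only score once the window is full
            mu = mean(history)
            sigma = stdev(history)
            if sigma > 0 and abs(value - mu) / sigma > threshold:
                anomalies.append(i)
        history.append(value)
    return anomalies


# Hypothetical rack inlet temperatures in degrees Celsius, with one spike.
inlet_c = [22.0, 22.1, 21.9, 22.0, 22.2, 21.8, 22.1, 22.0, 21.9, 22.1, 22.0, 22.0, 27.5, 22.1]
print(find_anomalies(inlet_c))
```

Note that a flagged value still enters the history and widens the window's spread for a while; a more careful implementation might exclude confirmed anomalies from the baseline.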

For example, Digital Realty’s Apollo AI platform analyzes power, cooling and environmental inputs across its global footprint to dynamically tune CRAC setpoints and compressor staging, driving real-time PUE improvements of 5–7% and cutting cooling energy use by up to 8% during peak loads (digitalrealty.com).

Underpinning these vendor solutions, the broader AIOps movement shows that intelligent monitoring can improve Mean Time to Detect (MTTD) by 15–20% and slash critical incidents by over 50%—translating to 5–10% overall energy and O&M cost reductions in data-center operations.

By embedding predictive analytics into alarms, workflow orchestration and automated remediation, modern colocation providers can guarantee higher uptime SLAs and more consistent PUE targets. Early adopters report fewer unplanned outages, longer asset lifecycles, and a streamlined operations staff, freeing technical teams to focus on capacity planning and service innovation rather than firefighting.

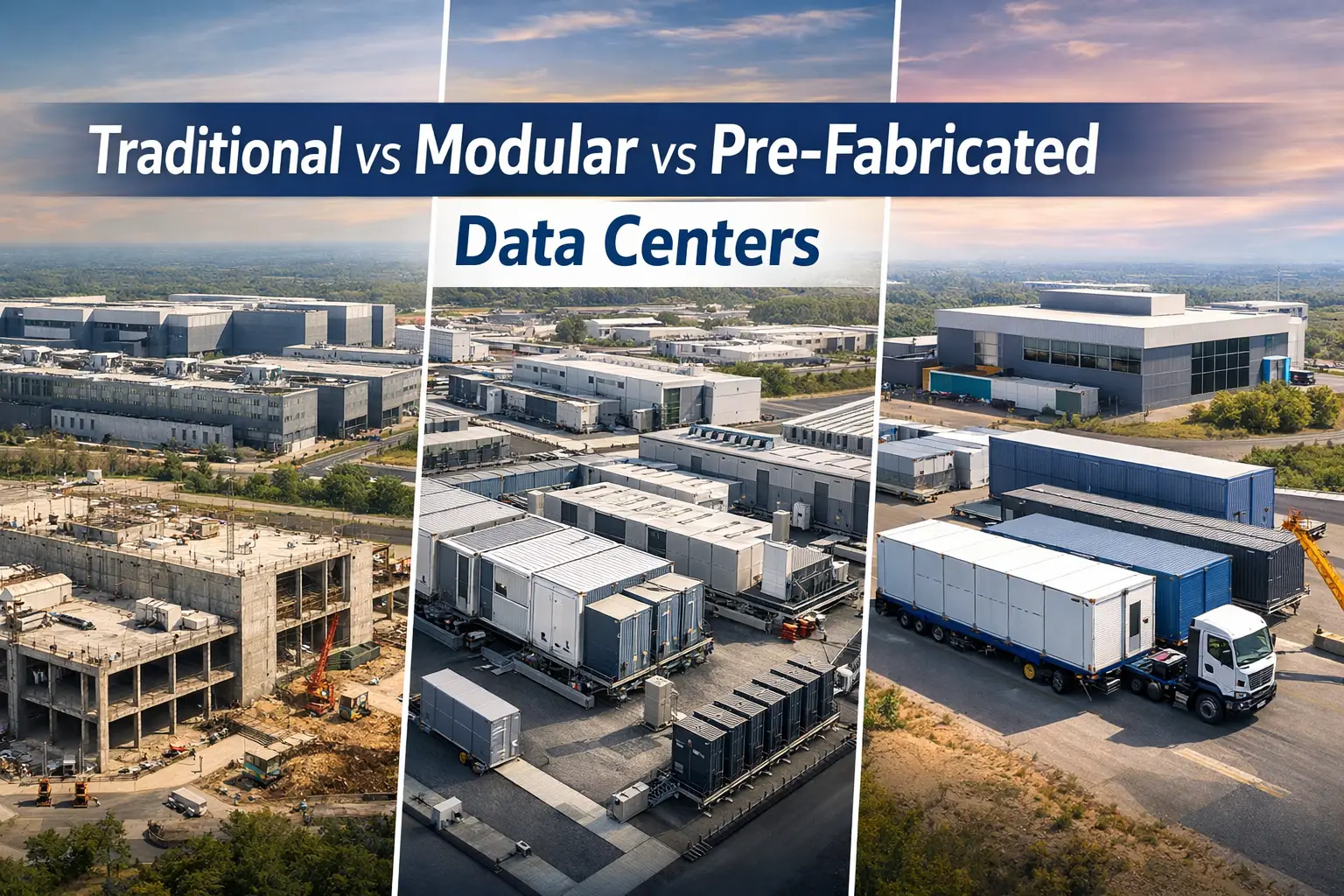

Modular & Containerized Data Centers

Prefabricated, skid-mounted and containerized data centers have revolutionized deployment timelines by compressing what once took months into mere weeks. According to Research and Markets, the global prefabricated and modular data centers market was valued at US$4.24 billion in 2024 and is projected to grow at a 15.39% CAGR to reach US$17.76 billion by 2034 (GlobeNewswire).

These containerized units bundle racks, power distribution units and integrated liquid-cooling loops into a single “plug-and-play” envelope. On the power side, prefabricated electrical skids—complete with UPS modules, switchgear and battery storage—are factory-tested and shipped ready for rapid on-site hookup, eliminating the complexities of coordinating multiple trades (pcxcorp.com).

Hybrid Cloud Interconnectivity

Direct cloud on-ramps inside the colocation facility simplify hybrid-IT architectures by eliminating the need to manage separate private WAN links for each provider and by centralizing interconnection services within the data center pod (CoreSite Experience).

Together, these innovations ensure that tenants can shift workloads seamlessly between their own racks, edge colos and hyperscale clouds with enterprise-grade performance, security and manageability.

Data Sovereignty & Regulatory Compliance

Colocation tenants face the EU AI Act’s data-governance rules for any AI systems hosted on-prem, plus national “cloud-sovereignty” laws (e.g., Germany’s Cloud Strategy Act or China’s Multi-Level Protection Scheme) that demand data remain within jurisdictional boundaries.

To satisfy these overlapping mandates—and to give customers confidence in their compliance posture—colo operators are now offering dedicated “digital sovereignty” solutions that guarantee data residency, auditability and regulatory alignment.

- Sovereign Enclaves – Physically and logically isolated cages or pods, complete with separate power feeds, key-management services and dedicated network fabrics, ensure that sensitive workloads never co-mix with other tenants’ data (CoreSite).

- On-Shore Clouds & EUCS Certification – Some operators partner with local governments or cloud platforms to deliver nationally governed clouds (e.g., Azure Germany, AWS Europe-Zurich, Oracle France), often certified under the European Cybersecurity Certification Scheme for Cloud Services (EUCS) for baseline security and interoperability (JD Supra).

By packaging these capabilities into colo offerings, providers bridge the gap between facility-level responsibilities (PUE, physical security) and tenant-driven IT governance—delivering turnkey environments where data sovereignty and regulatory compliance are baked into both the infrastructure and the service contract.

Section Summary

In summary, operators and tenants alike must look beyond traditional CapEx and OpEx calculations to capture the full economic and environmental footprint of their deployments.

Modern Total Cost of Ownership (TCO) models now incorporate carbon levies, grid-access and demand-charge fees, and even water usage costs—ensuring that choices around site location, utility contracts and equipment density reflect both financial and sustainability goals.
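A TCO model of this kind can be sketched in a few lines. The version below is deliberately simplified: it assumes a constant IT load, treats peak demand as equal to average draw, and uses entirely hypothetical prices and field names. Its only purpose is to show how carbon, demand-charge and water terms sit alongside rent and energy.

```python
from dataclasses import dataclass

HOURS_PER_YEAR = 8760


@dataclass
class SiteAssumptions:
    it_load_kw: float
    pue: float
    energy_price_per_kwh: float
    demand_charge_per_kw_month: float
    carbon_kg_per_kwh: float      # grid carbon intensity
    carbon_levy_per_tonne: float
    wue_l_per_kwh: float          # litres of water per IT kWh
    water_price_per_m3: float
    space_rent_per_year: float


def annual_tco(s: SiteAssumptions) -> dict[str, float]:
    """Break annual cost into rent, energy, demand, carbon and water lines."""
    it_kwh = s.it_load_kw * HOURS_PER_YEAR
    facility_kwh = it_kwh * s.pue
    costs = {
        "rent": s.space_rent_per_year,
        "energy": facility_kwh * s.energy_price_per_kwh,
        "demand": s.it_load_kw * s.pue * s.demand_charge_per_kw_month * 12,
        "carbon": facility_kwh * s.carbon_kg_per_kwh / 1000 * s.carbon_levy_per_tonne,
        "water": it_kwh * s.wue_l_per_kwh / 1000 * s.water_price_per_m3,
    }
    costs["total"] = sum(costs.values())
    return costs


# Hypothetical 100 kW deployment; every rate here is a placeholder.
example = SiteAssumptions(100, 1.5, 0.10, 10, 0.4, 50, 1.0, 2.0, 100_000)
for line, amount in annual_tco(example).items():
    print(f"{line:>7}: {amount:,.0f}")
```

Swapping in the PUE, WUE and grid carbon intensity of two candidate sites shows how location and utility contracts move the total, not just the headline rack price.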

At the same time, circular-economy strategies—such as IT-asset refurbishment, component remanufacturing and end-of-life recycling programs—are gaining traction, allowing data centers to close material loops, reduce embodied carbon and lower e-waste volumes while preserving core assets through multiple hardware lifecycles.

On the technology front, a deeper understanding of workload profiles is critical. GPU-heavy AI training clusters demand constant high-density power and robust liquid-cooling, whereas bursty analytics pipelines benefit more from flexible rack provisioning and dynamic VM orchestration.

Alongside tracking Power Usage Effectiveness (PUE), leading facilities now measure Water Usage Effectiveness (WUE) to optimize evaporative and adiabatic cooling strategies, further driving resource efficiency.

Finally, digital-twin simulations have emerged as a powerful planning tool—enabling operators to model cooling airflow, power-distribution contingencies and disaster-recovery scenarios virtually, validate capacity expansions before breaking ground, and rehearse fault-response drills without ever touching the switchgear.

Together, these insights form the foundation of a truly “next-gen” colocation strategy that is financially transparent, environmentally responsible, and rigorously engineered for the demands of modern IT.

Choosing a Colocation Provider

Selecting the right colocation provider is about more than just securing floor space—it’s about finding a partner whose infrastructure, services, and commitments align with your performance, security, and sustainability goals.

From geographic resiliency and low-latency connectivity to rigorous compliance certifications and transparent SLAs, each aspect contributes to the reliability and cost-effectiveness of your deployment. Below are the key factors to evaluate when choosing a provider that will support both your current needs and future growth.

- Location & Proximity: Latency impact, natural-disaster zones, and regulatory/data-sovereignty requirements.

- Connectivity Options: Number of carriers, direct cloud connections (e.g., AWS Direct Connect, Azure ExpressRoute).

- Certifications & Compliance: ISO 27001, SSAE 18, PCI DSS, HIPAA, GDPR/CSRD readiness.

- Service Levels & Transparency: SLAs covering PUE targets, network and power availability, response times.

- Sustainability Commitments: Reporting on PUE/WUE/CUE, renewable energy coverage, and carbon-neutral goals.

- Remote Hands & DCIM: Availability of on-site support and integration with data center infrastructure management tools.
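One way to turn the factors above into a comparable shortlist is a weighted scoring matrix. The sketch below is only an example of the technique: the weights, the 1 to 5 scale and the provider scores are hypothetical and should be replaced with values that reflect your own priorities.

```python
# Hypothetical weights for the six evaluation factors; adjust to your priorities.
WEIGHTS = {
    "location": 0.20,
    "connectivity": 0.20,
    "compliance": 0.15,
    "service_levels": 0.20,
    "sustainability": 0.15,
    "remote_hands": 0.10,
}


def weighted_score(scores: dict[str, float], weights: dict[str, float] = WEIGHTS) -> float:
    """Weighted average of per-factor scores (for example on a 1 to 5 scale)."""
    missing = set(weights) - set(scores)
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    total_weight = sum(weights.values())
    return sum(scores[factor] * w for factor, w in weights.items()) / total_weight


# Made-up assessments for two candidate providers.
candidates = {
    "provider-a": {"location": 4, "connectivity": 5, "compliance": 3,
                   "service_levels": 4, "sustainability": 3, "remote_hands": 4},
    "provider-b": {"location": 3, "connectivity": 3, "compliance": 5,
                   "service_levels": 4, "sustainability": 5, "remote_hands": 3},
}
ranking = sorted(candidates, key=lambda name: weighted_score(candidates[name]), reverse=True)
print(ranking)
```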

Future Trends

As the data-center industry continues to evolve at breakneck speed, a new wave of innovations is poised to redefine how organizations consume and secure infrastructure. In the coming years, fully managed AI and data-center services will merge into seamless on-demand offerings, while zero-trust architectures will bake identity and least-privilege controls into every layer of operations.

Meanwhile, 5G-native network slicing will guarantee predictable performance for latency-sensitive workloads, and “sustainability as a service” will transform environmental responsibility from a marketing promise into a measurable, SLA-backed reality.

- AIaaS & DCaaS Convergence: Fully managed rack-to-AI pipelines offered as on-demand services.

- Zero-Trust Data Centers: Embedding identity-centric security at every layer.

- 5G-Native Slicing: Dedicated network slices for colo tenants with real-time QoS.

- Sustainability as a Service: End-to-end carbon and water-neutral SLAs with automated reporting.

Colocation remains the foundational layer for hybrid and edge-driven digital architectures—combining the control of self-managed infrastructure with the economies, ecosystems and innovation enabled by professional, carrier-neutral data centers.

Ready to Elevate Your Colocation Strategy?

Azura Consultancy’s Expertise in Colocation Data Centers

Azura Consultancy, with a proven track record in data center consulting, partners with organizations at every stage of their colocated data-center journey, beginning with strategic planning and feasibility. We conduct in-depth feasibility studies that evaluate technical, financial, and operational viability (assessing network requirements, site conditions, market dynamics, and regulatory constraints) to identify risks and opportunities before you commit to build or lease.

Our technical due-diligence practice then delivers unbiased assessments of power and cooling architectures, redundancy schemes, physical security measures, and scalability, ensuring your project meets both industry standards and investor expectations.

For M&A or financing scenarios, we extend these evaluations into comprehensive Lender’s Due-Diligence Advisory (LTA) services—covering legal, commercial, and environmental dimensions so lenders and stakeholders can make informed decisions with confidence.

Beyond upfront analysis, Azura’s experts support business-plan development, total-cost-of-ownership modeling, and regulatory compliance roadmaps that factor in carbon levies, grid-access fees, and sustainability targets.

Our project-management team oversees end-to-end delivery, from vendor selection and design validation to construction supervision and commissioning, leveraging rigorous quality controls and real-time DCIM integration for seamless handover. Post-deployment, we offer ongoing performance optimization (tuning PUE/WUE metrics, implementing circular-economy asset-reuse programs, and deploying digital-twin simulations for capacity planning and disaster-recovery rehearsals) so your colocated infrastructure remains resilient, efficient, and aligned with evolving business goals.